With free ESXi over (not shocking, but a bit sad), I am now about to move away from a virtualisation platform I’ve used for a quarter of a century.

Never having really tried the alternatives, is there anything out there that looks and feels like ESXi?

I don’t host anything exceptional, and I don’t need production quality for myself, but in all seriousness, what we run at home ends up at work at some point, so there’s that aspect too.



proxmox

/thread

This is my go-to setup.

I try to stick with libvirt/`virsh` when I don’t need any graphical interface (integrates beautifully with ansible [1]), or when I don’t need clustering/HA (libvirt does support “clustering” at least in some capability: you can live migrate VMs between hosts, manage remote hypervisors from virsh/virt-manager, etc). On development/lab desktops I bolt virt-manager on top so I have the exact same setup as my production setup, with a nice added GUI. I heard that cockpit could be used as a web interface but have never tried it.

Proxmox on more complex setups (I try to manage it using ansible/the API as much as possible, but the web UI is a nice touch for one-shot operations).

Re incus: I don’t know for sure yet. I have an old LXD setup at work that I’d like to migrate to something else, but I figured that since both libvirt and proxmox support management of LXC containers, I might as well consolidate and use one of these instead.
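For anyone wondering what "managing it through the API" can look like, here is a minimal sketch, not the commenter's actual setup. It only builds (and does not send) a request that would list the VMs on one Proxmox node using an API token. The host name, node name, token ID and secret are all made-up placeholders; the port, path and header format follow the usual Proxmox VE API conventions as far as I know, so check them against your version's API docs.

```python
import urllib.request


def build_list_vms_request(host: str, node: str, token_id: str, token_secret: str) -> urllib.request.Request:
    """Build (but do not send) a request listing the QEMU VMs on one node."""
    # 8006 is the default Proxmox web/API port; adjust if yours differs.
    url = f"https://{host}:8006/api2/json/nodes/{node}/qemu"
    request = urllib.request.Request(url, method="GET")
    # API tokens are passed as: PVEAPIToken=USER@REALM!TOKENID=SECRET
    request.add_header("Authorization", f"PVEAPIToken={token_id}={token_secret}")
    return request


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    req = build_list_vms_request(
        "pve.example.lan", "pve1", "ansible@pve!inventory", "00000000-0000-0000-0000-000000000000"
    )
    print(req.get_method(), req.full_url)
```

Sending it would be a matter of passing the request to `urllib.request.urlopen` (with a proper TLS context for a self-signed certificate) and decoding the JSON response.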

I didn’t know libvirt supported HA

Did you read? I specifically said it didn’t, at least not out-of-the-box.

clustering != HA

The “clustering” in libvirt is limited to remote controlling multiple nodes, and migrating VMs between them. To get the High Availability part you need to set it up through other means, e.g. pacemaker and a bunch of scripts.
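To make the "remote control plus migration" part concrete, here is a rough sketch that builds the `virsh` command lines involved. It assumes SSH access to both hypervisors and the system-level QEMU/KVM driver; the host names `hv1`/`hv2` and the VM name `web01` are invented for the example. Nothing here restarts a VM when a host dies, which is exactly the HA gap described above.

```python
import shlex


def remote_uri(host: str, user: str = "root") -> str:
    """libvirt connection URI for a remote QEMU/KVM host reached over SSH."""
    return f"qemu+ssh://{user}@{host}/system"


def virsh_list_cmd(host: str) -> list[str]:
    """Command listing every VM (running or not) on a remote hypervisor."""
    return ["virsh", "--connect", remote_uri(host), "list", "--all"]


def virsh_live_migrate_cmd(domain: str, source_host: str, dest_host: str) -> list[str]:
    """Command live-migrating a running VM and keeping it defined on the target."""
    return [
        "virsh", "--connect", remote_uri(source_host),
        "migrate", "--live", "--persistent",
        domain, remote_uri(dest_host),
    ]


if __name__ == "__main__":
    # Hypothetical hosts and VM name; print the commands instead of running them.
    print(shlex.join(virsh_list_cmd("hv1")))
    print(shlex.join(virsh_live_migrate_cmd("web01", "hv1", "hv2")))
```

Live migration generally also expects the disk images to be reachable from both hosts (shared storage), unless you add the storage-copy options.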

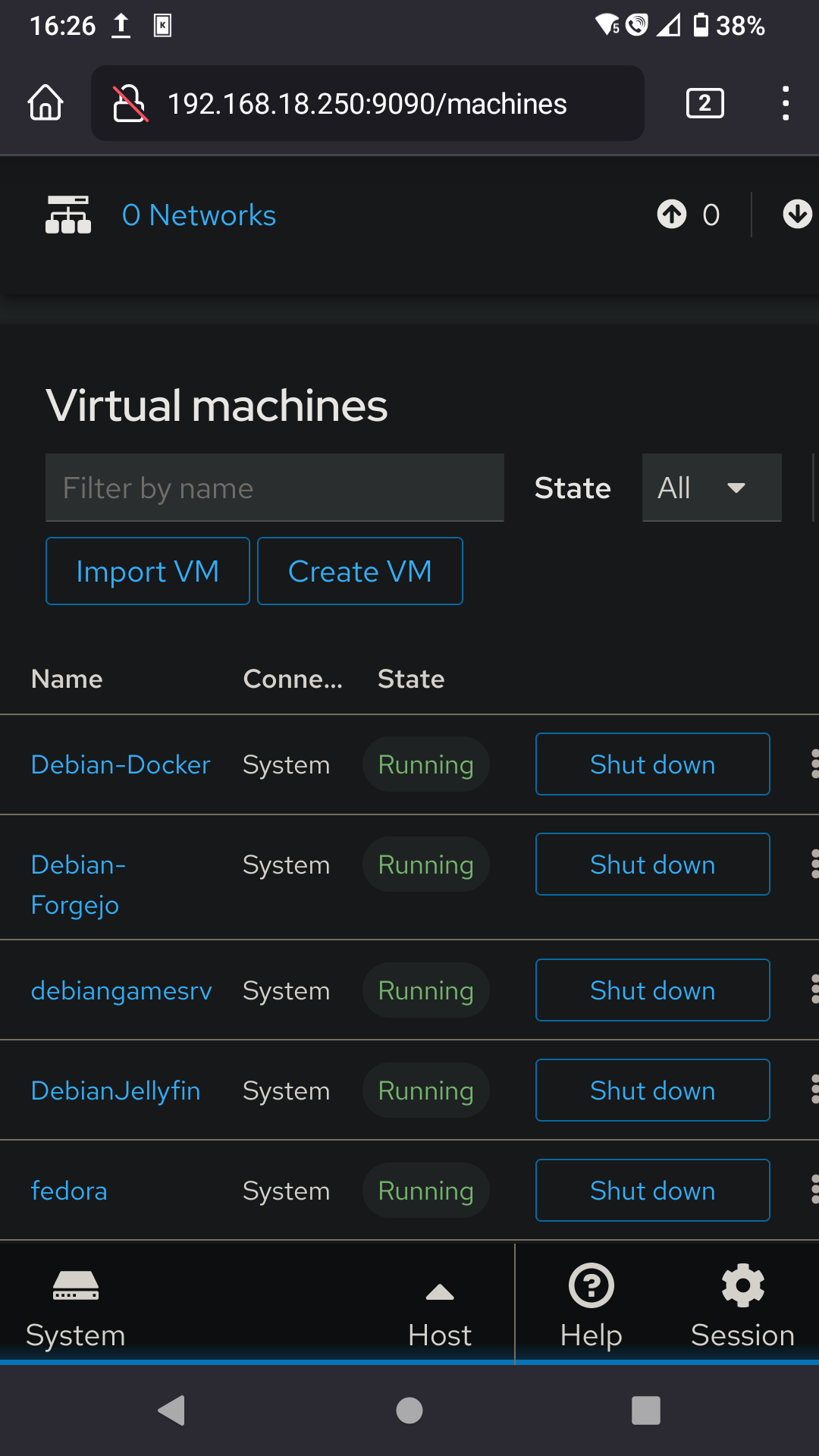

I use cockpit and my phone to start my virtual fedora, which has pcie passthrough on gpu and a usb controller.

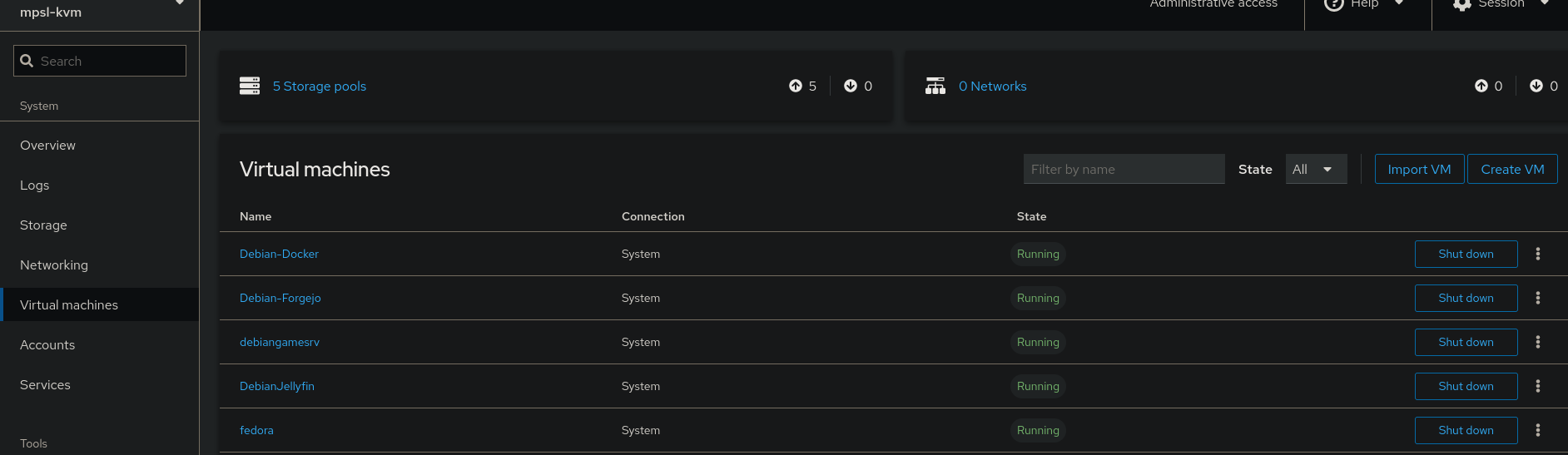

Desktop:

Mobile:

Maybe you should consider consolidating into Incus. You’re already running LXC containers, so why keep dragging along all the Proxmox bloat and potential issues when you can use LXD/Incus, made by the same people who made LXC, that is WAY faster, more stable, more integrated and free?

Hey look, it’s the Incus guy. Every time this topic comes up, you chime in and roast Proxmox and its potential issues with a link to a previous comment roasting Proxmox and its potential issues, and at no point go into what those potential issues are outside of the broad catch-all term of ‘bloat’.

I respect your data center experience, but I wish you were more forward with your issues instead of broad, generalized terms.

As someone with much less enterprise experience, but with small business IT administration experience: how does Incus replace ESXi for virtual machines, coming from the “containerization is the new hotness but doesn’t work for me” angle?

You funny guy 😂😂

Now, I’m on my phone so I can’t write that much, but I’ll say that the post I linked to isn’t about potential issues; it goes over specific situations where it failed (ZFS, OVPN, etc.), but I obviously won’t provide anyone with crash logs and kernel panics.

About ESXi: Incus provides you with a CLI and web interface to create, manage and migrate VMs. It also provides basic clustering features. It isn’t as feature complete as ESXi but it gets the job done for most people who just want a couple of VMs. At the end of the day it is more in line with what Proxmox offers than with what ESXi offers, BUT it’s effectively free, so it won’t withhold important updates from users running on free licenses.

If you list what you really need in terms of features, I can point you to documentation or give my opinion on how they compare and what to expect.
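As a rough idea of what "create, manage, migrate VMs" from the CLI means here, this sketch builds the `incus` commands for launching a VM (rather than a container) and for moving it to another cluster member. The image alias, instance name, resource limits and member name are placeholders picked for illustration, and the commands are only printed, not executed.

```python
import shlex


def incus_launch_vm_cmd(image: str, name: str, cpus: int, memory: str) -> list[str]:
    """Command creating and starting a virtual machine from a remote image."""
    return [
        "incus", "launch", image, name,
        "--vm",  # without this flag a container is created instead
        "-c", f"limits.cpu={cpus}",
        "-c", f"limits.memory={memory}",
    ]


def incus_move_cmd(name: str, target_member: str) -> list[str]:
    """Command moving an instance to another member of the same cluster."""
    return ["incus", "move", name, "--target", target_member]


if __name__ == "__main__":
    # Hypothetical instance and cluster member names.
    print(shlex.join(incus_launch_vm_cmd("images:debian/12", "vm1", 2, "4GiB")))
    print(shlex.join(incus_move_cmd("vm1", "node2")))
```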


The migration is bound to happen in the next few months, and I can’t recommend moving to incus yet since it’s not in stable/LTS repositories for Debian/Ubuntu, and I really don’t want to encourage adding third-party repositories to the mix - they are already widespread in the setup I inherited (new gig), and part of a major clusterfuck that is upgrade management (or the lack of). I really want to standardize on official distro repositories. On the other hand the current LXD packages are provided by snap (…) so that would still be an improvement, I guess.

Management is already sold on the idea of Proxmox (not by me), so I think I’ll take the path of least resistance. I’ve had mostly good experiences with it in the past, even if I found their custom kernels a bit strange to start with… do you have any links/info about the way in which Proxmox kernels/packages differ from Debian stable? I’d still like to put in a word of caution about that.

DO NOT migrate / upgrade anything to the snap package. That package is from Canonical and it’s from after the Incus fork; this means that if you go for it you may never be able to migrate to Incus afterwards and/or you’ll become a hostage of Canonical.

About the rest, if you don’t want to add repositories you should migrate to LXD LTS from the Debian 12 repositories. That version is and will be compatible with Incus; both the Incus and Debian teams have said that multiple times and are working on a migration path. For instance, the LXD from Debian will still be able to access the Incus image server while the Canonical one won’t.

It was already in place when I came in (made me roll my eyes), and it’s a mess. As you said, there’s no proper upgrade path to anything else. So anyway…

The LXD version in Debian 12 is buggy as fuck, this patch has not even been backported https://github.com/canonical/lxd/issues/11902 and 5.0.2-5 is still affected. It was a dealbreaker in my previous tests, and doesn’t inspire confidence in the bug testing and patching process on this particular package. On top of that, it will be hard to convince the other guys that we should ditch Ubuntu and their shenanigans, and that we should migrate to good old Debian (especially if the lxd package is in such a state). Some parts of the job are cool, but I’m starting to see there’s strong resistance to change, so as I said, path of least resistance.

Do you have any links/info about the way in which Proxmox kernels/packages differ from Debian stable?

So you say it is “buggy as fuck” because there’s a bug that makes it so you can’t easily run it if your locale is different than English? 😂 Anyways you can create the bridge yourself and get around that.

About the link, Proxmox kernel is based on Ubuntu, not Debian…

It sends pretty bad signals when it causes a crash on the first `lxd init` (sure I could make the case that there are workarounds, switch locales, create the bridge, but it doesn’t help make it appear as a better solution than proxmox). Whatever you call it, it’s a bad looking bug, and the fact that it was not patched in debian stable or backports makes me think there might be further hacks needed down the road for other stupid bugs like this one, so for now, hard pass on the Debian package (might file a bug on the bts later).

Thanks for the link mate, Proxmox kernels are based on Ubuntu’s, which are in turn based on Debian’s, not arguing about that - but I was specifically referring to this comment:

“having to wait months for fixes already available upstream or so they would fix their own shit”

any example/link to bug reports for such fixes not being applied to proxmox kernels? Asking so I can raise an orange flag before it gets adopted without due consideration.
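For reference, the two workarounds mentioned above (switching locales, creating the bridge by hand) could be scripted roughly like this. This is an untested sketch: whether forcing the C locale actually sidesteps the linked bug on 5.0.2-5 is an assumption taken from the thread, and `lxdbr0` is simply the bridge name `lxd init` proposes by default.

```python
import os
from typing import Mapping, Optional

# Manual bridge creation, as an alternative to letting `lxd init` do it.
CREATE_BRIDGE_CMD = ["lxc", "network", "create", "lxdbr0"]


def c_locale_env(base: Optional[Mapping[str, str]] = None) -> dict:
    """Copy an environment and force the C locale for a child process."""
    env = dict(os.environ if base is None else base)
    env["LC_ALL"] = "C"  # overrides every other locale category
    env["LANG"] = "C"
    return env


if __name__ == "__main__":
    env = c_locale_env({"LANG": "fr_FR.UTF-8", "PATH": "/usr/bin"})
    print(env["LC_ALL"], env["LANG"])
    # To actually use it (not run here):
    #   subprocess.run(["lxd", "init"], env=c_locale_env(), check=True)
```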

OP here. Thanks for all your input! It’s really an embarrassment of riches which will take me some time to navigate. But that’s part of the fun, right? Again, thanks everyone.

For home have a crack at KVM with front ends like proxmox or canonical lxd manager.

In an enterprise environment take a look at Hyper-V or if you think you need hyper converged look at Nutanix.

Minikube and try to get everything on Kubernetes?

Kubernetes yes, but minikube is kinda meh as a way to install it outside of development environments.

There are so many better, more manageable ways, like RKE/Rancher (which gives you the possibility to go k3s), Kubespray or even kubeadm.

All of those will result in a cluster that’s more suitable for running actual workloads.

Where does running VMs compare in any way to what Kubernetes does?

Depends on what you want to self host? Could be worth it to see if what you self host can be deployed as containers instead

I do not know. However, that logo is amazing

EDIT: Found it — https://sega-ai.neocities.org/


OOTL and someone who only uses a vm once every several years for shits & grins: What happened to vmware?

As part of the transition of perpetual licensing to new subscription offerings, the VMware vSphere Hypervisor (Free Edition) has been marked as EOGA (End of General Availability). At this time, there is not an equivalent replacement product available.

For further details regarding the affected products and this change, we encourage you to review the following blog post: https://blogs.vmware.com/cloud-foundation/2024/01/22/vmware-end-of-availability-of-perpetual-licensing-and-saas-services/

Whelp…boo-urns. :(

Coming from a decade of VMware ESXi and then a few years as a certified Nutanix guy, I was almost instantly at home clustering Proxmox, then added Ceph across my hosts and went ‘wtf did I sell Nutanix for’. I was already running FreeNAS (later TrueNAS) by then, so I was already converted to hosting on Linux, but seriously, I was impressed.

Business case: with what you save on licensing for Nutanix or vSAN, you can go all NVMe SSD and run Ceph.

I actually moved everything to docker containers at home… Not an apples to apples, but I don’t need so many full OSs it turns out.

At work we have a mix of things running right now to see. I don’t think we’ll land on ovirt or openstack. It seems like we’ll bite the cost bullet and move all the important services to amazon.